Most people would say that security is an engineering problem: you set up a web site and you want to make sure that it is not abused; or you build a house and you don’t want it to be burglarized. We usually expect engineers to ensure that such security requirements are met. Why are we hammering on security science here?

What is the difference between science and engineering?

Here is an answer going back to what was probably the very first paper on formal methods, Tony Hoare’s ‘Programs are Predicates’:

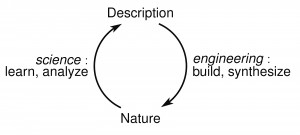

It is the aim of the natural scientist to discover mathematical theories, formally expressed as predicates describing the relevant observations that can be made of some physical system. […]

The aim of an engineer is complementary to that of the scientist. He starts with a specification, formally expressible as a predicate describing the desired observable behaviour of a system or product not yet in existence. Then […] he must design and construct a product that meets that specification.

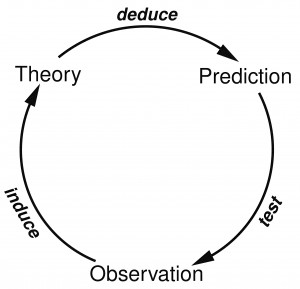

The picture above (not from the paper) illustrates the point:

- engineers build artificial systems,

- scientists analyze natural systems.

Is security the subject of a science or an engineering task?

Security is a property of artificial systems: software, a house, a car. So the case seems clear: security is an engineering task.

However, drugs are usually synthesized, and thus artificial, yet their effects are analyzed scientifically. A car is built by engineers, but its safety and security are analyzed by scientific methods. The Web consists of programs written by engineers, yet the processes that it supports are usually not programmable or controllable, and need to be analyzed by scientific methods. For all practical purposes, web computation can be considered to be a natural process, just like genetic computation and natural selection.

So security is both an engineering task and the subject of a science.

So that is that. Except there is a different, less obvious and more interesting link between security and science.

Security is like science

The glorious view of science is that it provides the eternal laws of nature. This view was persuasively expressed by David Hilbert, one of the most influential mathematicians of the XIX and XX centuries, in his famous Koenigsberg address of 1930:

For the mathematician there is no Ignorabimus, and, in my opinion, not at all for natural science either. The true reason why [no one] has succeeded in finding an unsolvable problem is, in my opinion, that there is no unsolvable problem. In contrast to the foolish Ignorabimus, our credo avers: We must know, We shall know!

In a particular twist of historical irony, at that same conference the young Kurt Goedel announced his famous incompleteness theorem, providing a method to generate unsolvable problems. Although not many people paid attention to Goedel during the conference, it soon became clear that Hilbert’s Program of discovering eternal laws was dead.

The practice and the theory of XX century science demonstrated that the glorious view of science was essentially wrong, i.e. wrong about the very essence of science: science never provides eternal laws, but only transient laws, which it continues to test, to disprove and to improve. This transience of scientific theories is nowadays both the generally accepted foundation of the logic of scientific discovery for scientists and perhaps the best kept secret of science for the general public.

This is where the parallel between science and security emerges. It is reflected in the following two columns.

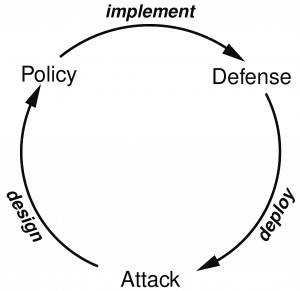

The quote in the left column is from Richard Feynman’s ‘Lectures on the Character of Physical Law’ (which were also recorded). The paragraph in the right column is the analogous statement about the character of security claims. Just like science, security never settles. The ongoing processes of science and the ongoing processes of security follow the same logical process, looping as in the next two diagrams.

But what are the practical consequences of this analogy? One direction for applying scientific methods in security is described in my HoTSoS paper (also on arXiv). A broader approach is the SecSci effort here.